Artificial intelligence (AI) and machine learning (ML) have the power to deliver business value and impact across a wide range of use cases, which has led to their rapidly increasing deployment across verticals. For example, the financial services industry is investing significantly in leveraging machine learning to monetize data assets, improve customer experience and enhance operational efficiencies. According to the World Economic Forum's 2020 "Global AI in Financial Services Survey," AI and ML are expected to "reach ubiquitous importance within two years."

However, because the adoption of AI/ML has grown in parallel with global demand for privacy and the regulation that accompanies it, businesses must be mindful of the security and privacy considerations associated with leveraging machine learning. These regulations affect the collaborative use of AI/ML not only between entities but also internally, as they limit an organization's ability to use and share data across business segments and jurisdictions. A global bank, for example, could be prohibited from leveraging critical data assets from another country or region to evaluate ML models. This limitation on data inputs can directly affect the effectiveness of the model itself and the scope of its use.

The privacy and security implications of leveraging ML are broad and often fall ultimately within the purview of the organization's governance or risk management functions. ML governance encompasses the visibility, explainability, interpretability and reproducibility of the entire machine learning process, including the data, outcomes and machine learning artifacts. Most often, the core focus of ML governance is on protecting and understanding the ML model itself.

In its simplest form, an ML model is a mathematical representation or algorithm that uses input data to compute a set of results, which could include scores, predictions or recommendations. ML models are unique in that they are trained on a set of data, either labeled (supervised ML) or unlabeled (unsupervised ML), in order to produce high-quality, meaningful results. A good deal of effort goes into effective model creation, and thus models are often considered to be intellectual property and valuable assets of the organization.
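As a rough illustration of "a mathematical representation trained from data," the sketch below fits a one-feature linear model by ordinary least squares and then uses it to score a new input. The function names and the account-age and risk-score figures are hypothetical, chosen only to make the idea concrete; real models are far larger, but the principle is the same.

```python
# Toy illustration: "training" fits parameters to data; the fitted model
# is then just a function from an input to a score.

def train_linear_model(xs, ys):
    """Fit y ~ w * x + b by ordinary least squares (one input feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b


def predict(model, x):
    """Apply the fitted parameters to a new input."""
    w, b = model
    return w * x + b


# Hypothetical training data: account age in years vs. observed risk score.
account_age = [1.0, 2.0, 3.0, 4.0]
risk_score = [9.0, 7.0, 5.0, 3.0]

model = train_linear_model(account_age, risk_score)  # the learned (w, b)
prediction = predict(model, 5.0)                     # score for an unseen input
```

The fitted pair `(w, b)` is the entire model here. Those parameters are derived from the training data, which is why a model can be both valuable and revealing.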

In addition to protecting ML models based on their IP merits, models must be protected from a privacy standpoint. In many business applications, effective ML models are trained on sensitive data often covered by privacy regulations, and any vulnerability of the model itself is a direct potential liability from a privacy or regulatory standpoint.

Thus, ML models are valuable — and vulnerable. Models can be reverse engineered to extract information about the organization, including the data on which the model was trained, which may contain PII, IP or other sensitive/regulated material that could damage the organization if exposed. There are two particular model-centric vulnerabilities with significant privacy and security implications: model inversion and model spoofing attacks.

In a model inversion attack, the data on which the model was trained can be inferred or extracted from the model itself. This could result in leakage of sensitive data, including data covered by privacy regulations. Model spoofing is a type of adversarial machine learning attack that attempts to fool the model into making an incorrect decision through malicious input. The attacker observes or "learns" the model and can then alter the input data, often imperceptibly, to "trick" it into making a decision that is advantageous for the attacker. This can have significant implications for common machine learning use cases, such as identity verification.
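To make the spoofing scenario concrete, here is a minimal sketch that assumes a hypothetical linear verification model whose weights the attacker has already learned. The weights, bias, feature values and the `spoof` helper are all invented for illustration; attacks on real models are more involved, but they follow the same idea of nudging each input slightly in the direction that moves the score.

```python
# Toy spoofing (evasion) example against a linear decision model.
# Assumption: the attacker knows the weights, e.g. after observing the model.

def score(weights, bias, features):
    return sum(w * x for w, x in zip(weights, features)) + bias


def is_accepted(weights, bias, features):
    """The model 'accepts' an input when its score is positive."""
    return score(weights, bias, features) > 0


def sign(v):
    return (v > 0) - (v < 0)


def spoof(weights, features, epsilon):
    """Shift every feature by at most epsilon in the direction that raises the score."""
    return [x + epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical model parameters and a legitimately rejected input.
weights = [0.8, -0.5, 0.3]
bias = -0.2
original = [0.2, 0.4, 0.5]

adversarial = spoof(weights, original, epsilon=0.1)

rejected_before = not is_accepted(weights, bias, original)
accepted_after = is_accepted(weights, bias, adversarial)
largest_change = max(abs(a - o) for a, o in zip(adversarial, original))
```

In this toy case, a change of no more than 0.1 per feature is enough to flip the decision from reject to accept. That is only possible because the attacker can see how the model weighs its inputs.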

These types of vulnerabilities affirm that ML models must be considered organizational assets that should be protected with the same vigilance as any other type of IP. Failure to adequately protect the model limits the applications for which it can be used or, in some cases, could lead the governance and risk team to prohibit its use entirely. Historically, there have been two options to address this exposure:

1. Machine learning analysis using sensitive content is not performed on external or cross-jurisdictional datasets, limiting the intelligence value of the data source and model.

2. Sensitive models are deployed despite the risk. This approach is not feasible in most circumstances due to regulatory barriers.

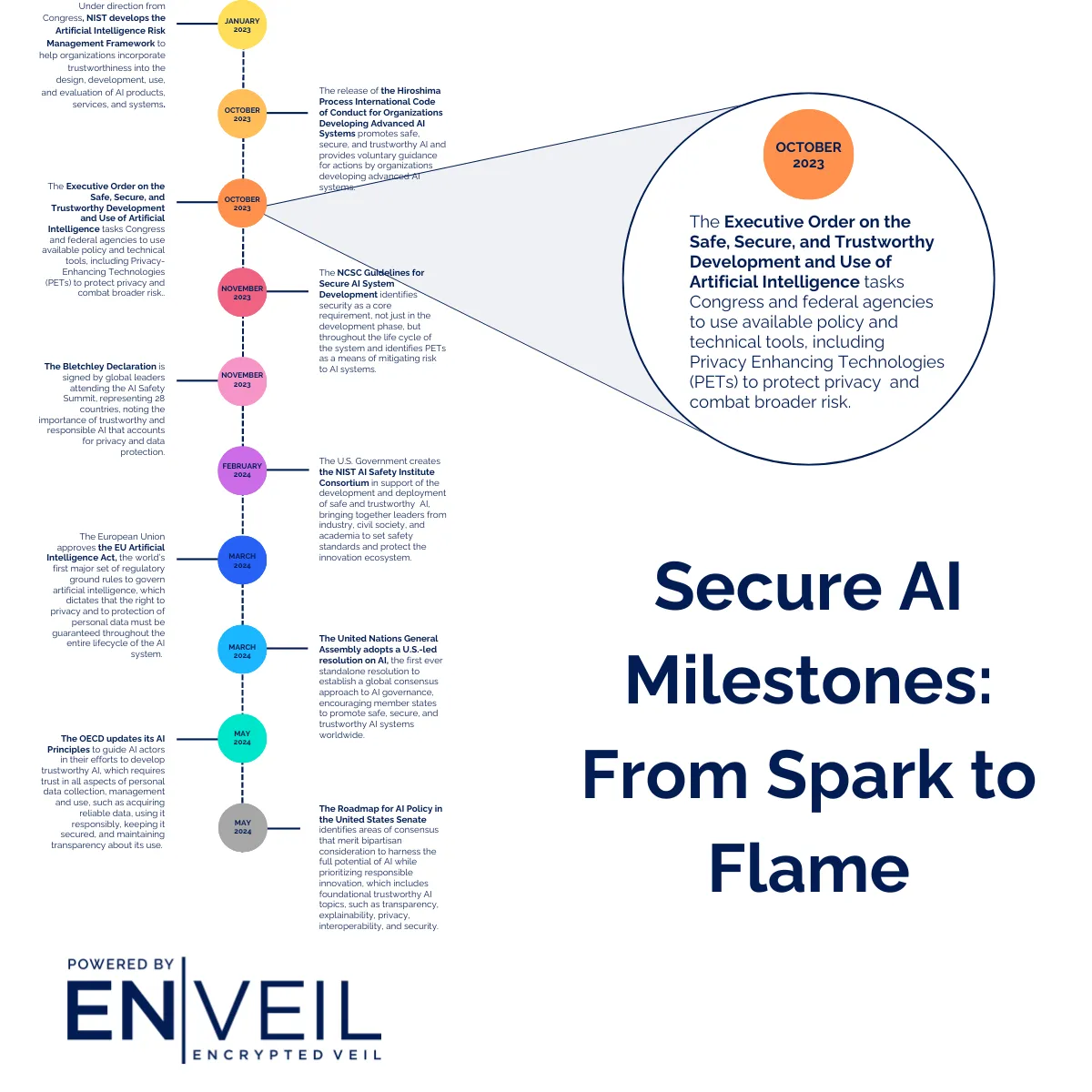

However, recent advancements in privacy-enhancing technologies (PETs) address these challenges and enable privacy-preserving machine learning. This category of technologies preserves the privacy of data throughout its life cycle and enables data to be used securely and privately. For organizations, PETs are an innovative path to extracting critical insights and pursuing data collaboration practices, such as machine learning, while remaining in compliance.

When it comes to privacy-preserving machine learning, these technologies, such as homomorphic encryption, allow organizations to encrypt an ML model and evaluate it in its encrypted state, so the model is never exposed in plaintext in the environment where it runs. This greatly reduces the privacy and security vulnerabilities described above and creates a new paradigm for secure and private machine learning and decisioning across organizations and jurisdictions. It also enables the decentralization of data sharing, as data owners can collaborate while retaining positive and auditable control of their data assets. When considering whether to use privacy-preserving machine learning, business leaders should evaluate the sensitivity of the data involved and the potential value gained by broadening the scope of the model's use.
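The idea of evaluating a model while it stays encrypted can be sketched with a toy example. The code below uses the Paillier cryptosystem, a well-known additively homomorphic scheme, as a stand-in; the article does not say which scheme a given product uses, so this choice is an assumption. The key size is deliberately tiny and insecure, and the integer weights and features are hypothetical. A production system would rely on a vetted cryptographic library, not hand-written code.

```python
# Toy Paillier example: score data against a linear model whose weights
# are only ever seen in encrypted form. NOT secure; illustration only.
import math
import secrets


def generate_keys():
    # Assumption: two small, well-known Mersenne primes as toy parameters.
    p, q = 2**31 - 1, 2**61 - 1
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because the generator is g = n + 1
    return n, (lam, mu)   # public key n, private key (lam, mu)


def encrypt(n, m):
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in [1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m % n, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n, private_key, c):
    lam, mu = private_key
    u = pow(c, lam, n * n)
    m = ((u - 1) // n) * mu % n
    return m - n if m > n // 2 else m  # map back to signed integers


def encrypted_score(n, encrypted_weights, features):
    """Compute Enc(sum(w_i * x_i)) without ever decrypting a weight."""
    n_sq = n * n
    result = encrypt(n, 0)
    for c_w, x in zip(encrypted_weights, features):
        # Enc(w)^x = Enc(w * x); multiplying ciphertexts adds the plaintexts.
        result = (result * pow(c_w, x, n_sq)) % n_sq
    return result


# Model owner: encrypts hypothetical integer weights before sharing the model.
n, private_key = generate_keys()
weights = [3, -2, 5]
encrypted_weights = [encrypt(n, w) for w in weights]

# Data owner: evaluates the encrypted model on local, non-negative features.
features = [4, 1, 2]
c_score = encrypted_score(n, encrypted_weights, features)

# Model owner: decrypts only the final score.
final_score = decrypt(n, private_key, c_score)
```

In this sketch the party holding the data sees only ciphertexts, and the model owner sees only the final score, not the features that produced it. Production schemes support richer models than a single weighted sum, but the division of knowledge is the point.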

Organizations increasingly rely on machine learning to generate insights, make predictions and improve outcomes. However, any effective use of AI/ML must prioritize security and privacy. Privacy-preserving machine learning using breakthrough PETs can allow organizations to reap the benefits of machine learning and broaden their capacity to derive insights for improved intelligence-led decision-making without exposing IP, PII or other organizationally sensitive information. By limiting this risk, the focus can remain on the business benefits of the results derived rather than the risks inherent in the ML model itself.

Read the full article at Forbes.