Excerpt:

"Reflecting on 2023, it would be challenging to find any topic that generated more interest and concern than artificial intelligence. We started the year with a flood of ChatGPT content and the steady drumbeat around generative AI continued, culminating with the recent flood of news surrounding OpenAI's leadership challenges.

For the most part, themes of "faster," "easier" and "more efficient" dominated AI-related headlines, but if you listened closely, there was an occasional voice of warning reminding users, especially enterprise users, to be mindful of the risks associated with AI implementation and to remember that things that seem too good to be true often are. The call for mindfulness around responsible, safe and trustworthy AI grew stronger last month with bold statements and directives from globally influential groups including G7 Leaders, the White House, and a wide cross-section of representatives from 28 countries who attended the U.K.'s AI Safety Summit.

While these actions and the commentary they generate drive increased consideration by organizations looking to leverage AI for business purposes, we still face a very real chasm of understanding. The AI sphere is complicated, untested and unclear, both from a technical and policy perspective. Devising sustainable long-term strategies around Secure AI requires bringing a wide range of stakeholders to the table, and the best foundation for such discussion is shared understanding. To help facilitate that engagement, of which we need much more, we need to get back to the basics: breaking down the definition of Secure AI, the technology behind it, and where we see it successfully applied today.

While the terms AI and machine learning can be used to describe numerous use cases across a broad range of industries, the majority of these actions center around using machines and data to improve or advance the status quo. To deliver the best outcomes, AI and machine learning capabilities need to be trained, enriched and leveraged over a broad, diverse range of data sources. At its core, Secure AI comes down to trust, risk and security relating to that data.

When a machine learning model is trained on new and disparate datasets, it becomes smarter over time, resulting in increasingly accurate and valuable insights that were previously inaccessible. However, these models encode the data on which they were trained. When the data sources contain sensitive information, using these models raises significant concerns, as adversarial machine learning attacks designed to glean the sensitive information are low-hanging fruit. This makes any technical or security vulnerability in the model itself, whether it is being trained or leveraged, a significant liability. The AI functionality that promised to deliver business-enhancing, actionable insights now substantially increases the organization's risk profile. AI users must acknowledge this risk and act to mitigate its impact.

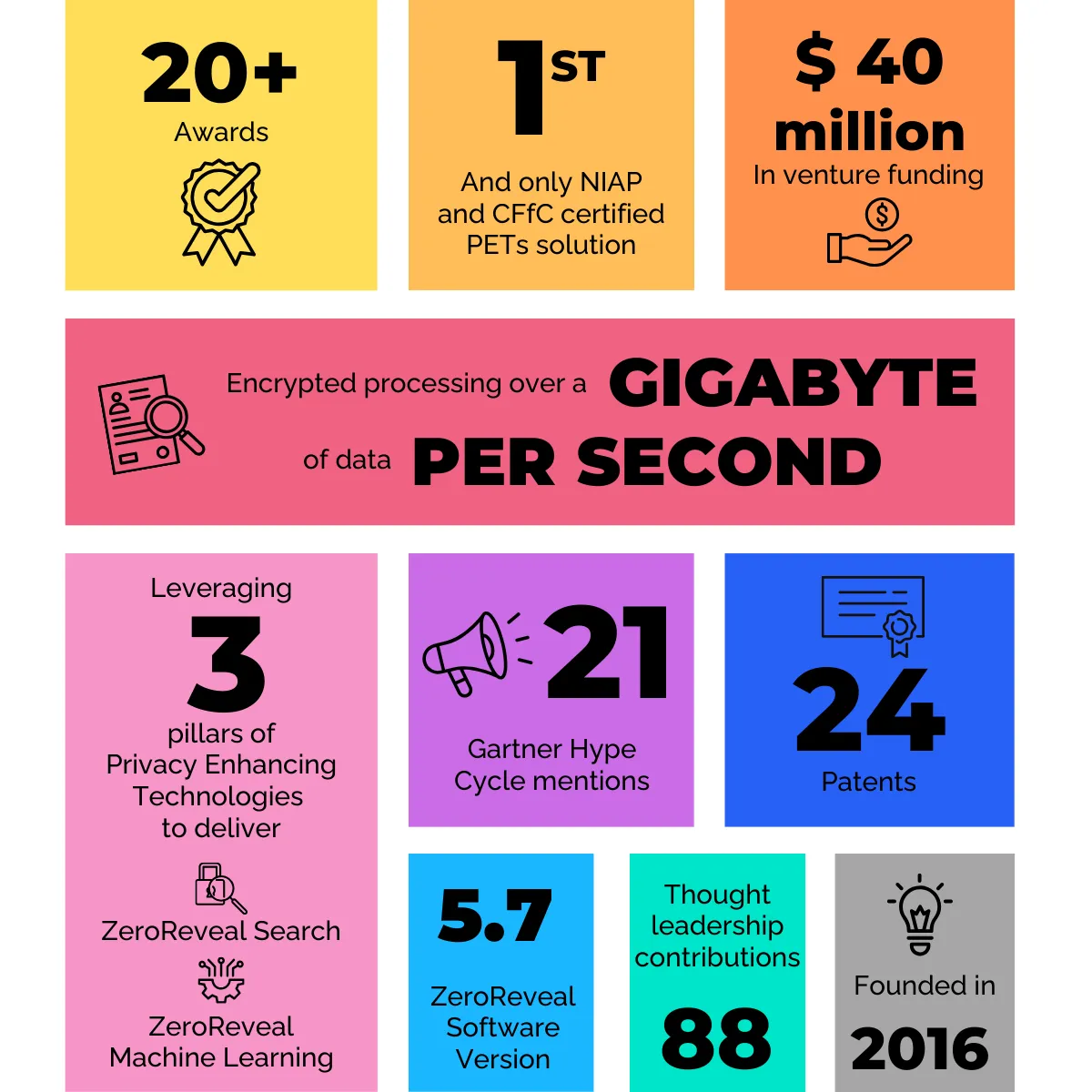

One of the best ways to counter the increased risk associated with AI applications is to incorporate the use of Privacy Enhancing Technologies. As the name suggests, this family of technologies enhances, enables and preserves the privacy of data throughout its life cycle. They uniquely protect data while it's being used or processed, thereby securing the usage of data. This is critical for organizations looking to capitalize on the power of AI, as machine learning capabilities are data-driven and data-hungry."

Read the full article as published by IAPP here.